Ollama

Models running locally in Ollama work great with Promptscape because they are free to use and run offline! They are a great place to start.

You Will Need

- Ollama installed

- A model downloaded and running in Ollama, such as Llama 3.3 (`ollama run llama3.3`)
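Before adding the model, you can check that Ollama is reachable and that your model has been downloaded. This is a minimal Python sketch, assuming Ollama's default address `http://localhost:11434` and its `GET /api/tags` endpoint, which lists locally available models. The helper names `list_local_models` and `model_is_available` are hypothetical and not part of Promptscape.

```python
import json
import urllib.request

# Assumption: Ollama's default address; change this if you set a custom host.
OLLAMA_HOST = "http://localhost:11434"


def list_local_models(host: str = OLLAMA_HOST) -> dict:
    """Fetch the list of downloaded models from Ollama's /api/tags endpoint."""
    with urllib.request.urlopen(f"{host}/api/tags", timeout=5) as response:
        return json.load(response)


def model_is_available(tags: dict, model: str) -> bool:
    """Return True if `model` appears in an /api/tags response.

    Ollama reports names with a tag (e.g. "llama3.3:latest"), so a bare
    name like "llama3.3" is treated as "llama3.3:latest".
    """
    wanted = model if ":" in model else f"{model}:latest"
    names = {entry.get("name") for entry in tags.get("models", [])}
    return wanted in names


if __name__ == "__main__":
    try:
        tags = list_local_models()
    except OSError as err:  # URLError (e.g. connection refused) is an OSError
        print(f"Could not reach Ollama at {OLLAMA_HOST}: {err}")
    else:
        if model_is_available(tags, "llama3.3"):
            print("llama3.3 is available")
        else:
            print("llama3.3 not found; try `ollama pull llama3.3`")
```

If the script reports that the model is missing, download it with Ollama before continuing.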

Adding an Ollama model

- Run the command `Promptscape: Create a Model`.

- When prompted to choose a provider, choose `ollama`.

- The next prompt will ask for the model name. This is the same name you use when you run the model in Ollama. For example, the Ollama command for Llama 3.3 is `ollama run llama3.3`, so `llama3.3` is the model name you should use here.

- Next, you'll be asked for the hostname. If you haven't configured a custom Ollama host (for example, with the `OLLAMA_HOST` environment variable), leave this blank and Promptscape will use the default localhost port. Ollama listens on `localhost:11434` by default.

- Finally, enter a memorable & descriptive nickname for your model.

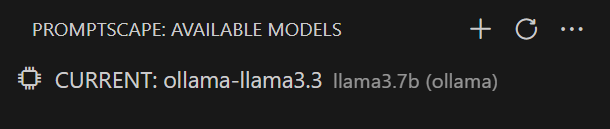

If you have completed all the steps, you'll find your model in the Explorer. Click it once to activate it.
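If the model doesn't respond once activated, one way to narrow down the problem is to send a prompt straight to Ollama using the same model name and hostname you entered in Promptscape. This minimal Python sketch assumes Ollama's `POST /api/generate` endpoint with `"stream": false`. The helpers `resolve_host` and `build_generate_request` are hypothetical, and the blank-hostname fallback only mirrors the behavior described in the hostname step; it is not Promptscape's actual code.

```python
import json
import urllib.request

DEFAULT_HOST = "http://localhost:11434"  # Ollama's default address


def resolve_host(hostname: str) -> str:
    """Fall back to the default local address when the hostname is blank."""
    hostname = hostname.strip()
    if not hostname:
        return DEFAULT_HOST
    # Assumption: a bare "host:port" means plain HTTP.
    if not hostname.startswith(("http://", "https://")):
        hostname = "http://" + hostname
    return hostname.rstrip("/")


def build_generate_request(model: str, prompt: str, hostname: str = "") -> urllib.request.Request:
    """Build a non-streaming POST request to Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{resolve_host(hostname)}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_generate_request("llama3.3", "Say hello in one sentence.")
    try:
        with urllib.request.urlopen(request, timeout=120) as response:
            # With "stream": false, Ollama returns a single JSON object.
            print(json.load(response)["response"])
    except OSError as err:  # covers URLError and timeouts
        print(f"Request failed: {err}")
```

If this request succeeds but Promptscape still gets no reply, double-check the model name and hostname saved for your Promptscape model.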